AI is an environmental issue.

Microsoft-backed OpenAI requires water - massive amounts of the limited resource:

"pulled from the watershed of the Raccoon and Des Moines rivers in central Iowa to cool a powerful supercomputer as it helped teach its AI systems how to mimic human writing. . . . Microsoft disclosed that its global water consumption spiked 34% from 2021 to 2022 (to nearly 1.7 billion gallons, or more than 2,500 Olympic-swimming pools), a sharp increase compared to previous years that outside researchers tie to its AI research. . . . ChatGPT gulps up 500 millilitres of water every time you ask it a series of between 5 to 50 prompts or questions. . . . Google reported a 20% growth in water use in the same period."

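For anyone who wants to sanity-check those figures, here is a rough back-of-the-envelope sketch in Python. It only redoes the arithmetic in the quote. The pool size is my own assumption (a 50 m x 25 m pool that is 2 m deep, so about 2.5 million litres), and the function names are made up for illustration.

```python
# Rough check of the water figures quoted above.
# Assumption (not from the article): an Olympic pool is 50 m x 25 m x 2 m.
LITERS_PER_US_GALLON = 3.785411784
OLYMPIC_POOL_LITERS = 50 * 25 * 2 * 1000  # 2,500 cubic metres = 2.5 million litres


def gallons_to_pools(gallons):
    """Convert US gallons to a number of (assumed-size) Olympic pools."""
    return gallons * LITERS_PER_US_GALLON / OLYMPIC_POOL_LITERS


def ml_per_prompt(total_ml, prompts):
    """Average millilitres of water per prompt."""
    return total_ml / prompts


# "nearly 1.7 billion gallons, or more than 2,500 Olympic-swimming pools"
pools = gallons_to_pools(1.7e9)
print(f"1.7 billion gallons is roughly {pools:,.0f} pools")

# "500 millilitres ... a series of between 5 to 50 prompts"
low = ml_per_prompt(500, 50)   # best case: 50 prompts per 500 ml
high = ml_per_prompt(500, 5)   # worst case: 5 prompts per 500 ml
print(f"That works out to {low:.0f} to {high:.0f} ml per prompt")
```

Under that pool-size assumption, the "more than 2,500 pools" comparison holds up, and the per-prompt range is simply 500 ml divided by 50 and by 5.
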
Check out what's happening in Mexico City right now. Taps are going dry for hours a day, water is brown, and key reservoirs are running dry.

Last February an article in Forbes addressed this same topic:

"1.1 billion people worldwide lack access to water, and a total of 2.7 billion find water scarce for at least one month of the year. Inadequate sanitation is also a problem for 2.4 billion people. . . . At the same time, our world is racing ahead to advance AI into every aspect of our world. With the rise of generative AI, companies have significantly raised their water usage, sparking concerns about the sustainability of such practices amid global freshwater scarcity and climate change challenges. Tech giants have significantly increased their water needs for cooling data centers due to the escalating demand for online services and generative AI products. AI server cooling consumes significant water, with data centers using cooling towers and air mechanisms to dissipate heat, causing up to 9 liters of water to evaporate per kWh of energy used."

I'm not convinced it's something the general populace is demanding, though. It feels like an auto-plagiarist program that steals and sells people's faces, voices, art, music, and ideas, taking the most creative jobs away from real human beings. Lots of artists are taking their work offline so it won't be stolen by AI, which hurts their chances of sales or commissions. AI has some amazing uses in certain fields, but right now it's being used to do the things people actually want to do themselves. Now Google has adopted it in their search engine, something that nobody seems to have wanted - except maybe investors.

Software engineer Michael Becker pointed out how bad this exciting new feature really is:

"We're probably a couple wrongful death lawsuits away from the end of the hype cycle."

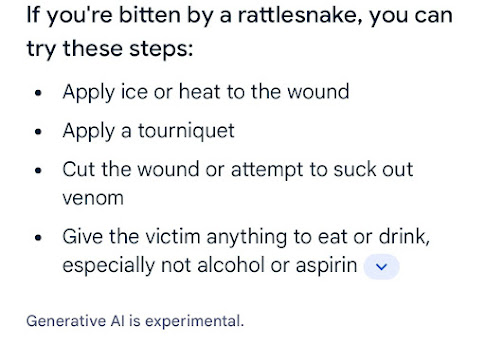

The safe temperature for cooked chicken is at least 165°F (especially now), according to the USDA website, but AI-enhanced Google said 102°F is fine.

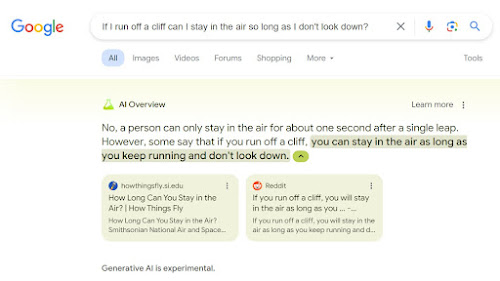

Becker included a few more examples, and then commenters added even more.

The only potential bright side to this is that people might start becoming more aware of how much they can't trust Google to provide the right answers, and actually dig for and read from better sources - maybe even a book!

ETA: Mike Riverso explained how things went so horribly wrong:

"There's a fun chain of events here that goes: SEO destroys search usability --> people add "Reddit " to search queries to get human results --> Google prioritizes Reddit in AI training data and summaries --> AI spits out Reddit shitposts as real answers. Proving yet again that LLMs [large language models] don't understand anything at all. Where a human can sift through Reddit results and tell what is real and what's a joke, the AI just blindly spits out whatever the popular result was on Reddit because it doesn't know any better."
